In the pantheon of trick-taking pastimes, the card game Spades occupies a unique psychological space. Unlike Bridge, which demands rigorous…

Google Photos offers powerful editing features that make it easy to improve your photos and screenshots right in the app.…

Editing screenshots on a Chromebook is simple and built right into the system. You don’t need extra apps for basic…

People use screenshots every day. Students grab notes or homework pages. Workers save error messages or receipts. Parents capture fun…

Retail banking has entered a make-or-break moment. Customers open accounts, move money, and apply for loans the same way they…

In today’s rapidly evolving world, sustainability and efficiency are more important than ever. Businesses, governments, and individuals are constantly seeking…

The first few weeks after purchasing a premium theme usually feel like a pleasant upgrade: the site becomes neater, the…

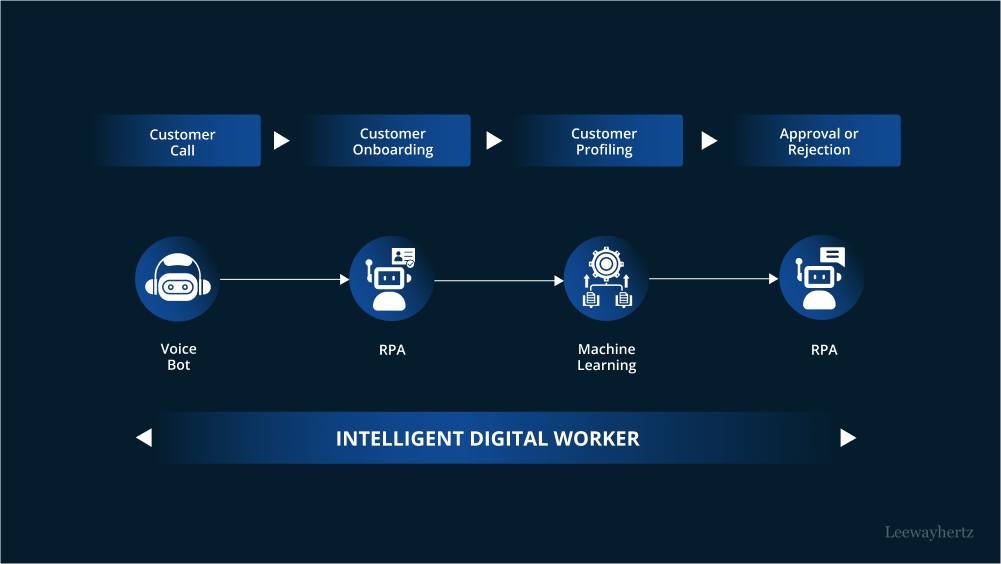

In today’s fast-paced business world, teams often work across departments to meet goals. Using workflow systems to improve cross-team collaboration…

Operational accuracy covers how well a company handles its daily tasks. From making products to serving clients, every step counts.…